Applying The Circuit Breaker Pattern at HMH

Introduction

Microservice architectures have been widely adopted within HMH, specifically for the following characteristics:

- Separation of concerns with less coupling

- Modularity

- Service to service communication via well-defined REST APIs

- Reusability

- Independent CI/CD pipeline for deployment

- Enhanced business agility via fast development and deployment

A microservice architecture can be fragile because each user action invokes multiple services. It replaces the in-memory calls of a monolithic architecture with multiple remote calls across the network. This works well when all services are up and running, but when one or more services are unavailable or exhibiting high latency, the result can be cascading failures across the enterprise. Retry logic in service clients only makes things worse and can bring the whole application down.

All services will fail or falter at some point. The Circuit Breaker pattern helps prevent catastrophic cascading failures across multiple systems. It allows you to build a fault-tolerant, resilient system that degrades gracefully when key services are either unavailable or have high latency.

Circuit Breaker

In a microservices world, there are many cases where Service A calls Service B via REST APIs across the network for certain data, as illustrated below:

Service A sends Service B a request via REST API across the network, and Service B responds to the request successfully within a reasonable time (i.e., within milliseconds).

When Service B is down, Service A should still try to recover from this and try to do one of the following:

- Custom Fallback: try to get the same data from some other source. If this is not possible, use its own cache value.

- Fail fast: If Service A knows that Service B is down, there is no point waiting for the timeout and consuming its own resources.

- Heal automatically: Periodically check if Service B is working again and try to send the request again.

- Other APIs should work: All other APIs which depend on Service A should continue to work.
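The custom fallback behavior from the list above can be sketched in plain Java. This is a minimal illustration, not taken from any library: the class name `CachedFallbackClient`, the `fetch` method, and the use of a `Function` to stand in for the REST call to Service B are all assumptions made for the example.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical helper: wraps a remote call with a cache-based fallback.
class CachedFallbackClient {
    // Stands in for the REST call from Service A to Service B.
    private final Function<String, String> remoteCall;
    // Last successful response per key, used when the remote call fails.
    private final Map<String, String> lastKnownGood = new ConcurrentHashMap<>();

    CachedFallbackClient(Function<String, String> remoteCall) {
        this.remoteCall = remoteCall;
    }

    String fetch(String key, String defaultValue) {
        try {
            String fresh = remoteCall.apply(key);
            lastKnownGood.put(key, fresh); // remember the value for later fallbacks
            return fresh;
        } catch (RuntimeException e) {
            // Service B failed: serve the cached value, or the default if none exists.
            return lastKnownGood.getOrDefault(key, defaultValue);
        }
    }
}
```

With this shape, callers of Service A keep getting an answer while Service B is down, although the answer may be stale.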

Circuit Breaker States

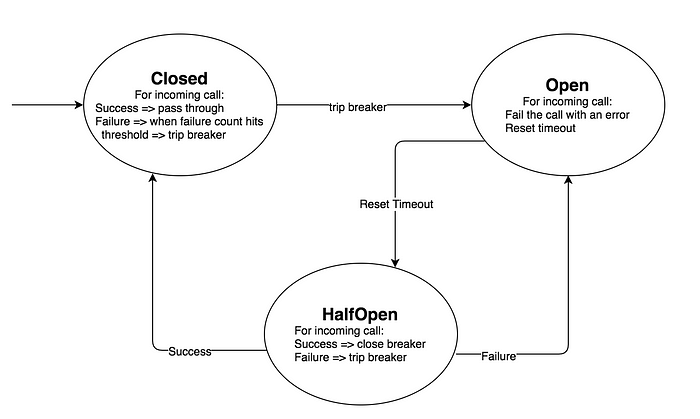

The Circuit Breaker concept is simple. It has the following three states:

- Closed — When everything is normal, the circuit breaker remains in the closed state and all calls pass through to the services. When the number of failures exceeds a predetermined threshold the breaker trips, and it goes into the Open state.

- Open — The circuit breaker returns an error for calls without executing the function.

- HalfOpen — After a timeout period, the circuit switches to a halfopen state to test if the underlying problem still exists. If a single call fails in this halfopen state, the breaker is once again tripped. If it succeeds, the circuit breaker resets back to the normal closed state.
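The three states and their transitions can be sketched as a small state machine in plain Java. This is a simplified illustration under stated assumptions, not the Hystrix or Spring Retry implementation: the class `SimpleCircuitBreaker` is hypothetical, failures are counted consecutively, the breaker trips when the count reaches the threshold, and the current time is passed in explicitly so the behavior is easy to follow.

```java
import java.util.function.Supplier;

// Hypothetical, simplified circuit breaker for illustration only.
class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold;   // consecutive failures that trip the breaker
    private final long openTimeoutMillis; // how long to stay Open before a trial call
    private State state = State.CLOSED;
    private int failures = 0;
    private long openedAt = 0L;

    SimpleCircuitBreaker(int failureThreshold, long openTimeoutMillis) {
        this.failureThreshold = failureThreshold;
        this.openTimeoutMillis = openTimeoutMillis;
    }

    // Open -> HalfOpen once the timeout period has elapsed.
    synchronized State currentState(long nowMillis) {
        if (state == State.OPEN && nowMillis - openedAt >= openTimeoutMillis) {
            state = State.HALF_OPEN;
        }
        return state;
    }

    // Holding the lock during the call keeps the sketch short; a real breaker would not.
    synchronized <T> T call(Supplier<T> action, Supplier<T> fallback, long nowMillis) {
        if (currentState(nowMillis) == State.OPEN) {
            return fallback.get(); // fail fast without executing the action
        }
        try {
            T result = action.get();
            failures = 0;
            state = State.CLOSED; // a success in HalfOpen resets the breaker
            return result;
        } catch (RuntimeException e) {
            failures++;
            if (state == State.HALF_OPEN || failures >= failureThreshold) {
                state = State.OPEN; // trip, or trip again after a failed trial call
                openedAt = nowMillis;
            }
            return fallback.get();
        }
    }
}
```

For example, with a threshold of 2 and a timeout of 1000 ms, two failed calls open the circuit, calls in the next second return the fallback immediately, and the first call after that second is the trial call that either closes or reopens the circuit.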

The following diagram illustrates the state transitions.

Circuit Breaker State Behavior

Now let’s examine the service call behavior in each of the states.

Circuit Breaker in Closed State

In this state, all calls go through to Service B and responses come back without the circuit breaker adding any latency.

Circuit Breaker in Open State

If Service B is experiencing slowness, the circuit breaker receives timeouts for requests to that service. Once the number of timeouts reaches a predetermined threshold, the circuit breaker trips to the Open state. In the Open state, the circuit breaker returns an error for all calls to the service without making the calls to Service B. This behavior gives Service B a chance to recover by reducing its load.

Circuit Breaker in HalfOpen State

This state is used to determine whether Service B has recovered. The circuit breaker periodically makes a trial call to Service B. If the trial call times out, the circuit breaker goes back to the Open state. If the call returns successfully, the circuit switches to the Closed state. While in the HalfOpen state, the circuit breaker returns an error for all other incoming calls to Service B.
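One way to let only a single trial call through in the HalfOpen state is an atomic flag. The sketch below is an assumption about how this could be done, not how Hystrix or Spring Retry do it; `HalfOpenGate` and its method names are hypothetical.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical gate that admits one trial call at a time while the circuit is HalfOpen.
class HalfOpenGate {
    private final AtomicBoolean trialInFlight = new AtomicBoolean(false);

    // Returns true for exactly one caller until trialFinished() is called;
    // every other caller gets false and should receive an error or fallback.
    boolean tryAcquireTrial() {
        return trialInFlight.compareAndSet(false, true);
    }

    // Called when the trial call has completed, whether it succeeded or failed.
    void trialFinished() {
        trialInFlight.set(false);
    }
}
```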

Implement Circuit Breaker Using Spring Cloud Netflix Hystrix

There are many ways to implement the Circuit Breaker pattern in the application. At HMH our microservices are built with Spring Boot, and it’s a natural fit to use Spring Cloud Netflix Hystrix for the implementation. For more information on implementing a circuit breaker, refer to Spring’s tutorial and guide.

Leverage Spring Retry Framework

As you see above, a retry mechanism is needed to periodically send the request to the target service to see if it has recovered. Spring offers a retry mechanism that is easy to set up with a RetryTemplate, where you can define the number of retries, the retry interval, and messages. For details, refer to the Spring Retry documentation.
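To make the idea concrete, here is what retrying with a fixed interval amounts to, written in plain Java without Spring. It is only a conceptual sketch: `FixedIntervalRetry` and its `execute` method are hypothetical names, and Spring Retry's RetryTemplate offers considerably more (policies, listeners, recovery callbacks).

```java
import java.util.function.Supplier;

// Hypothetical helper: retries an action with a fixed wait between attempts.
final class FixedIntervalRetry {
    private FixedIntervalRetry() { }

    static <T> T execute(Supplier<T> action, int maxAttempts, long backOffMillis) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        RuntimeException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return action.get(); // success: stop retrying
            } catch (RuntimeException e) {
                last = e;
                if (attempt < maxAttempts) {
                    sleep(backOffMillis); // wait before the next attempt
                }
            }
        }
        throw last; // all attempts failed: surface the last error
    }

    private static void sleep(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException ie) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while backing off", ie);
        }
    }
}
```

Note that `maxAttempts` here counts the first call as well, so a value of 3 means one initial call plus up to two retries.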

Example of Combining Spring Circuit Breaker and Retry

Based on the Spring circuit breaker tutorial, the example below shows how to configure Spring Retry using a RetryTemplate.

First, in your Spring application add the following annotations. If you followed the above tutorial, you added those annotations to the ReadingApplication class:

@EnableCircuitBreaker
@RestController
@SpringBootApplication
public class ReadingApplication {
    ...
}

Next, set up a RetryTemplate. You can put it either in the AppConfig where you define your RestTemplate or in your Application class; in this example, we put it in the ReadingApplication class:

@Bean
public RetryTemplate retryTemplate() {
    RetryTemplate retryTemplate = new RetryTemplate();

    FixedBackOffPolicy fixedBackOffPolicy = new FixedBackOffPolicy();
    fixedBackOffPolicy.setBackOffPeriod(2000); // wait 2000 ms between attempts
    retryTemplate.setBackOffPolicy(fixedBackOffPolicy);

    SimpleRetryPolicy retryPolicy = new SimpleRetryPolicy();
    retryPolicy.setMaxAttempts(3); // at most 3 attempts in total, including the first call
    retryTemplate.setRetryPolicy(retryPolicy);

    // hook in a listener for retry lifecycle callbacks, see below for details
    retryTemplate.registerListener(new DefaultListenerSupport());
    return retryTemplate;
}

For this example, we used a SimpleRetryPolicy with a FixedBackOffPolicy; you can also use a more sophisticated back-off policy such as ExponentialBackOffPolicy to suit your application's needs.
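As an illustration of what an exponential back-off does to the wait between attempts, the plain-Java sketch below computes the sequence of intervals. The class `ExponentialBackOffSketch` and the numbers in the usage note are assumptions chosen for the example; they are not Spring Retry code or its defaults.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: computes the waits an exponential back-off would produce.
final class ExponentialBackOffSketch {
    private ExponentialBackOffSketch() { }

    static List<Long> intervals(long initialMillis, double multiplier, long maxMillis, int retries) {
        List<Long> result = new ArrayList<>();
        double next = initialMillis;
        for (int i = 0; i < retries; i++) {
            // Each wait grows by the multiplier but is capped at maxMillis.
            result.add((long) Math.min(next, maxMillis));
            next = next * multiplier;
        }
        return result;
    }
}
```

Calling `intervals(1000, 2.0, 5000, 4)` yields waits of 1000, 2000, 4000 and 5000 ms: the interval doubles each time until it hits the cap, which gives a struggling service progressively more room to recover than a fixed interval does.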

Next, set up the circuit breaker, the retry mechanism, and the fallback operation. Continuing the above example, you do this in the BookService class.

Rewrite the existing readingList() method as below:

@CircuitBreaker(include = { Exception.class }, openTimeout = 10000, resetTimeout = 30000, maxAttempts = 1)
public String readingList() {
    retryCount = 1;
    return retryTemplate.execute(new RetryCallback<ResponseEntity<String>, RuntimeException>() {
        @Override
        public ResponseEntity<String> doWithRetry(RetryContext arg0) throws RuntimeException {
            logger.info("Retry.................... No. " + retryCount++);
            return new ResponseEntity<String>(callingDownStreamService(), HttpStatus.OK);
        }
    }).getBody();
}

Also, introduce a private method:

private String callingDownStreamService() {
    URI uri = URI.create("http://localhost:8090/recommended");
    return this.restTemplate.getForObject(uri, String.class);
}

Define the fallback method, annotated with the Recover annotation:

@Recover()
public String reliable() {
    return "Cloud Native Java (O'Reilly)";
}

The above fallback method simply returns a default reading list. If there is no sensible default, it’s fine to throw a Service Unavailable exception (HTTP status 503) instead.

As mentioned above, you can register a listener on the RetryTemplate. The listener provides additional callbacks upon retries, which can be used for cross-cutting concerns across different retries. An example is given below:

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.retry.RetryCallback;
import org.springframework.retry.RetryContext;
import org.springframework.retry.listener.RetryListenerSupport;

public class DefaultListenerSupport extends RetryListenerSupport {

    private static final Logger logger = LoggerFactory.getLogger(DefaultListenerSupport.class);

    @Override
    public <T, E extends Throwable> void close(RetryContext context, RetryCallback<T, E> callback, Throwable throwable) {
        logger.info("onClose");
        super.close(context, callback, throwable);
    }

    @Override
    public <T, E extends Throwable> void onError(RetryContext context, RetryCallback<T, E> callback, Throwable throwable) {
        logger.info("onError");
        super.onError(context, callback, throwable);
    }

    @Override
    public <T, E extends Throwable> boolean open(RetryContext context, RetryCallback<T, E> callback) {
        logger.info("onOpen");
        return super.open(context, callback);
    }
}

The open and close callbacks come before and after the entire retry, and onError applies to the individual RetryCallback calls.

With this, when the circuit is Open and you hit the localhost:8080/to-read endpoint, you will see the following on the console:

2019-03-03 11:29:19.981 INFO 99774 --- [nio-8080-exec-4] listener.DefaultListenerSupport : onClose
2019-03-03 11:29:19.983 INFO 99774 --- [nio-8080-exec-4] hello.BookService : Retry.................... No. 1
2019-03-03 11:29:19.991 INFO 99774 --- [nio-8080-exec-4] listener.DefaultListenerSupport : onError
2019-03-03 11:29:24.995 INFO 99774 --- [nio-8080-exec-4] hello.BookService : Retry.................... No. 2
2019-03-03 11:29:24.996 INFO 99774 --- [nio-8080-exec-4] listener.DefaultListenerSupport : onError
2019-03-03 11:29:29.999 INFO 99774 --- [nio-8080-exec-4] hello.BookService : Retry.................... No. 3
2019-03-03 11:29:30.000 INFO 99774 --- [nio-8080-exec-4] listener.DefaultListenerSupport : onError
2019-03-03 11:29:30.000 INFO 99774 --- [nio-8080-exec-4] listener.DefaultListenerSupport : onOpen

After the 3 retries, you get the following response back (via the fallback call):

Cloud Native Java (O'Reilly)

If the target service recovers within the retry period, the successful response comes back as below:

Spring in Action (Manning), Cloud Native Java (O'Reilly), Learning Spring Boot (Packt)

Parameterized Configuration Settings

It’s likely that we need to fine-tune some of the circuit breaker and retry parameters and make them configurable per environment. The example below shows how to do this.

In the app’s application-{env}.yml file (where env could be local, dev, int or prod) you could add the following parameters:

spring:
  retry:
    backoff: 5000
    max-attempts: 3
  circuit-breaker:
    open-timeout: 10000
    reset-timeout: 30000
    max-attempts: 1

In the ApplicationConfig class, you can inject those values and even define defaults, like this:

@Value("${spring.retry.backoff:10}")
String backoffPeriod;

@Value("${spring.retry.max-attempts:1}")
String maxAttempts;

@Value("${spring.circuit-breaker.open-timeout:20}")
String openTimeoutCB;

@Value("${spring.circuit-breaker.reset-timeout:20}")
String resetTimeoutCB;

@Value("${spring.circuit-breaker.max-attempts:1}")
String maxAttemptsCB;

Those parameters can also be used in the CircuitBreaker annotation, like this:

@CircuitBreaker(include = { Exception.class },
        openTimeoutExpression = "#{${spring.circuit-breaker.open-timeout}}",
        resetTimeoutExpression = "#{${spring.circuit-breaker.reset-timeout}}",
        maxAttemptsExpression = "#{${spring.circuit-breaker.max-attempts}}")

Example for Using Hystrix Command

Rewrite the existing readingList() method as below:

@HystrixCommand(fallbackMethod = "reliable",
        commandKey = "readingList", commandProperties = {
                @HystrixProperty(name = "fallback.enabled", value = "true") })
public String readingList() {
    return retryTemplate.execute(new RetryCallback<ResponseEntity<String>, RuntimeException>() {
        @Override
        public ResponseEntity<String> doWithRetry(RetryContext arg0) throws RuntimeException {
            return new ResponseEntity<String>(callingDownStreamService(), HttpStatus.OK);
        }
    }).getBody();
}

Introduce a private method:

private String callingDownStreamService() {
    URI uri = URI.create("http://localhost:8090/recommended");
    return this.restTemplate.getForObject(uri, String.class);
}

Parameterized Configuration Settings

It’s likely that we need to fine-tune some of the circuit breaker and retry parameters and make them configurable per environment. The example below shows how to do this.

In the app’s application-{env}.yml file (where env could be local, dev, int or prod) you could add the following parameters:

spring:
  retry:
    backoff: 1000 # setting backoff higher would mean not tripping circuit breaker effectively.
    max-attempts: 3

hystrix:
  command:
    default:
      circuitBreaker:
        requestVolumeThreshold: 20
        errorThresholdPercentage: 50 # 50%
        sleepWindowInMilliseconds: 5000 # 5s
      execution:
        isolation:
          thread:
            timeoutInMilliseconds: 10000 # 10s
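A rough sketch of how these settings interact, in plain Java. It assumes the simplified rule that the circuit trips once the rolling window holds at least requestVolumeThreshold requests and the failure percentage is at or above errorThresholdPercentage. The class and method names are hypothetical, and Hystrix's rolling statistics window is not modeled. The second method reflects one reading of the comment on backoff: the waits between retry attempts happen inside the Hystrix command, so they have to fit within timeoutInMilliseconds.

```java
// Hypothetical helper illustrating the Hystrix settings above; not Hystrix code.
final class HystrixSettingsSketch {
    private HystrixSettingsSketch() { }

    // Simplified trip rule: enough traffic in the window AND a high enough error rate.
    static boolean shouldTrip(int totalRequests, int failedRequests,
                              int requestVolumeThreshold, int errorThresholdPercentage) {
        if (totalRequests < requestVolumeThreshold) {
            return false; // too few requests to judge, even if all of them failed
        }
        int errorPercentage = (int) (100.0 * failedRequests / totalRequests);
        return errorPercentage >= errorThresholdPercentage;
    }

    // Total time spent waiting between attempts when every attempt fails.
    static long retryBackOffBudgetMillis(int maxAttempts, long backOffMillis) {
        return (long) (maxAttempts - 1) * backOffMillis;
    }
}
```

With the values above, 19 failures out of 19 requests do not trip the circuit, while 10 failures out of 20 do. Three attempts with a 1000 ms backoff spend 2000 ms waiting, which leaves room inside the 10000 ms timeout; a 5000 ms backoff would spend 10000 ms waiting, so the command would time out before the last attempt could complete.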