Right-Sizing AWS RDS

Amazon Relational Database Service (Amazon RDS) has been the pioneer in managed Relational Databases in the cloud. RDS also consistently appears among the top AWS offerings based on popularity and usage, often trading the top 3 spots between Amazon EC2 and Amazon S3. And Why Not? RDS provides a wide spectrum of capabilities we look forward to in a managed relational database solution including, but not limited to dynamic capacity, patching, and backups allowing development teams to iterate faster, focus more on optimizing applications, and not to worry too much about the usual Database Administration tasks you would take on otherwise. RDS provides us with six database engines to choose from, including Amazon Aurora, PostgreSQL, MySQL, MariaDB, Oracle Database, and SQL Server. Reliable performance, high availability, and security are some of the core principles that RDS is built on.

Right sizing is the process of matching instance types and sizes to your workload performance and capacity requirements at the lowest possible cost. It’s also the process of looking at deployed instances and identifying opportunities to eliminate or downsize without compromising capacity or other requirements, which results in lower costs.

Source: AWS

Right-Sizing does require some level of effort ranging from understanding workloads and usage patterns (sawtooth or bursty, predictable, steady, etc.), storage requirements, redundancy and failover, multi-az deployments, cost, choosing between RDS vs Aurora, reads vs writes, and the list goes on…

The key to right-sizing is to understand precisely your organization’s usage needs and patterns and know how to take advantage of the elasticity of the AWS Cloud to respond to those needs.

Why Right-Size RDS?

Our motivation to right-size could simply be performance improvements or lowering cost or both. It is quite common for performance to be prioritized over cost, which can result in overprovisioned capacity and a lot of $$ spent on resources idling.

Right-Size is a continual process

Right-Sizing is an ongoing process and must evolve and mature alongside your application. There are plenty of reasons to keep up such as:

- Application architecture updates (New SQL patterns introduced by updates to the workload source code).

- Responding to changes in usage and workload patterns.

- A new generation of instance type/family available.

- A new engine or kernel upgrade.

- A database version upgrade.

You should check items 1 and 2 quite often. Once a sprint, once a month or whatever works for you. You should also monitor your RDS metrics and set alarms as appropriate.

You can look at items 3, 4, and 5 less frequently. Quarterly or yearly work well for many RDS users.

Getting Started

Often with the wide spectrum of choices available, the dilemma is where do you start? The answer to this is going to depend on whether you may be migrating your existing database to RDS (and that we understand the application and database characteristics), building from scratch, trying to improve your application performance and/or lower cost.

There aren’t any short-cuts “yet” or a magic wand for arriving at the best AWS RDS instance type or configuration for your application.

Migrating to RDS

The first step in any relational DB rightsizing project should be to optimize the schema, stored procedures, triggers, indices, and SQL that is used to access the DB. However because AWS allows you to change HW resources at will, you can migrate an unoptimized DB to AWS, optimize later, and re-evaluate your needs after the optimization step has been completed. Assuming we have metrics for workload, CPU, memory, IO, storage, and network we can certainly use the AWS Instance matrix to find a good match for where to start. Note that this does not substitute for testing and should be only considered to be a starting point that you could then iterate and arrive at the right-size.

Building from Scratch

Most RDS users starting from scratch look at Open Source engines such as Mysql, MariaDB, and PostgreSQL. You could also choose between the RDS flavor (Amazon RDS, Amazon Aurora, Aurora Serverless) that works best for your application. Starting with something reasonable for instance size (small to medium) for the application, implementing good monitoring, testing your workloads, and moving along the instance class matrix backed by metrics will be a good strategy.

Improving Performance

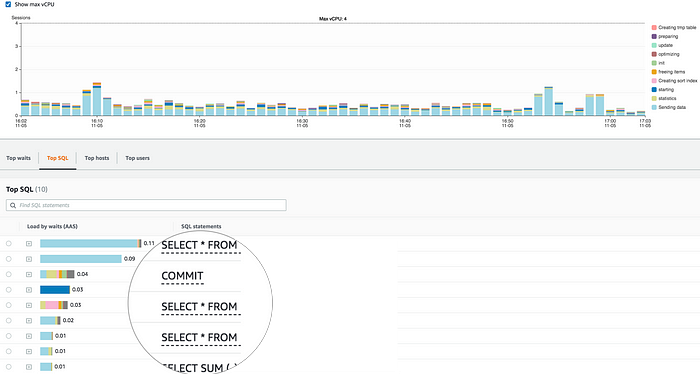

Improving application performance with right-sizing is best done with careful evaluation of the RDS metrics (CPU, memory, IO, network in/out, engine specific metrics, etc.), mapping back to the instance matrix, and iterating on testing to find the sweet spot. Throwing more hardware at the problem is often a go-to solution with instant gains but certainly not a permanent solution in many cases. Specifically when there are issues with the application code or SQL. Identifying those bottlenecks and hotspots, and optimizing your code/SQL will be the key to improve resource utilization and thus performance. For AWS RDS, consider turning on performance insights and enhanced monitoring to get quick insights into your database performance, group top queries by IO waits, users, and hosts.

Some quick pointers for you to consider:

- Replace select * with only the columns you need where possible to improve query performance and IO.

- Believe it or not, this one of the most common pitfalls we run into irrespective of whether we may be using native SQL or an ORM. - Find queries not using indexes and optimize.

- This could have a big impact on memory and CPU utilization. - Review your engine specific metrics and evaluate your query cache strategy.

- Review cache hit ratio and metrics.

- Solutions and recommendations could vary between engines. For instance, turning on query_cache for a high-performance MySQL server is not always considered a best practice and depends on the workload and use-case. - Review index scan metrics.

- Queries performing a disk scan are perfect candidates to optimize. - Review Common Table Expressions — SQL WITH clause. Often these access many more rows than is necessary.

- Use predicate/projection pushdown and sort elimination effectively. - Implement appropriate slow query logging, monitor, and identify hotspots. AWS RDS can export database logs to cloudwatch and is a great way to have all of your database metrics and slow query logs in one place for analysis.

- Use a log analyzer such as pgBadger for PostgreSQL, pt-query-digest for MySQL to help slice and dice your logs to find the top queries you would benefit from optimizing.

- Review UDFs, Triggers, and Stored Procedures for:

- Procedural code, especially loops — can this be replaced by a set-based operation?

- Unnecessary and excessive temp table creation — these can get quite costly in both Memory and IOPs especially if the procedures are called often

- Use of cursors — these are usually unnecessary within a Stored Procedure and can cause DB to process fewer simultaneous queries - Review default parameter groups and tune them for your workloads.

- Any auto-configured parameters, connections, buffers (join/sort/buffer pool), autovacuum configuration for PostgreSQL etc.

- Pay close attention to any thread or connection level parameters as you would want to balance query optimization with resource utilization. - Moving from Amazon RDS to Amazon Aurora can improve performance. Amazon Aurora has 5X the throughput of standard MySQL and 3X the throughput of standard PostgreSQL).

- Aurora charges separately for IOPs, so Before you move to Aurora you should use your IOPs metrics to estimate the IOPs cost. - Regularly, upgrade the database engine to use the latest and greatest frequently to benefit from improvements and patches.

Lowering Cost

With effective monitoring, one could identify and stop any idle RDS instances. This is also something that is easily detected and reported by the AWS Trusted Advisor or the AWS Cost Anomaly Detection (the newest addition to the cost management suite and is under Beta at the moment). If there was a possibility to terminate these, that would even help lower the costs further as we do not need to pay for the EBS volumes. Other strategies such as cutting over to a different instance type or a different instance family also help reduce cost in many cases. For predictable usage patterns, it may also be possible to find time windows (such as overnight or weekends, etc) where you may be able to Scale-In and save on cost.

Reserved Instances can also save you up to 69% over On-Demand rates for instances that must run 24x7x365.

Last (but not least), watch out for new instance families/types and related announcements from AWS which in many cases could translate to a sizeable improvement for performance for the same price or less. For example, AWS just announced m6g and r6g families which provide significantly faster performance at an 11% lower price than their m5 and r5 counterparts.

Right Size RDS Instances

One size does not fit all. Amazon RDS offers several database instance types optimized for memory, compute, storage, and network configurations for your workloads.

Note that EBS optimized and Enhanced Networking options are enabled on all supported RDS instance types at this time.

Amazon RDS allows you to choose between Fixed Performance Instances (e.g. M6g, M5, and R5) and Burstable Performance Instances (e.g. T3).

General Purpose

Burstable

T3 is the least expensive instance family. It runs on shared HW and is a good choice if your workloads are not latency-sensitive and require a lot of CPU in short bursts. Many RDS users choose this instance family in development and test environments. They can be used in production, where latency isn’t a large concern. Choose t3 for lower prices and faster performance.

Fixed performance

M (4, 5, 6g) is available in RDS only and offers a balance of compute, memory, and network resources and is also a great choice for a variety of workloads including sustained usage at a high rate of utilization. Choose M6g to get the best price-performance ratio.

Memory-Optimized

R (4, 5, 6g) instance family has twice the Memory to CPU ratio of the T and M families and it is a good choice for DBs that require a lot of memory but low amounts of CPU. Choose M6g on RDS to get faster performance at a lower cost. R6g is not available on Aurora yet, so choose R5.

X (1, 1e, 1d)] instance family currently supports SQL Server and Oracle on RDS (not available at this time for Amazon Aurora or RDS on MySQL, PostgreSQL, MariaDB) with up to 1,952 GiB of DRAM-based memory, four Intel® Xeon® E7 8880 v3 (codenamed Haswell) processors, and up to 128 vCPUs.

CPU Burst Credits

T3 instances’ baseline performance and ability to burst are governed by CPU Credits. Each T3 instance receives CPU Credits continuously, the rate of which depends on the instance size. T3 instances accrue CPU Credits when they are idle, and use CPU credits when they are active. A CPU Credit provides the performance of a full CPU core for one minute. Think of this as a pool of resources (your CPU credit balance) to draw from when you need them the most. T3 instances are unlimited by default, meaning that, while they won’t be throttled, they can rack up additional costs if you run CPU-hungry workloads. If you run unlimited, and often end up on the higher side for cost, it may be best to consider running fixed performance instances such as R (6g,5) as opposed to burstable and bring in some predictability and lowering costs.

If you do run a burstable instance, it is a best practice to set CloudWatch alarms on your burst balance, More details and burst computation can be found at Burstable Performance Instances.

IOPS and Storage

Amazon RDS volumes are built using Amazon EBS volumes, except for Amazon Aurora, which uses a virtualized storage layer purpose-built for database workloads. A lot of limits we talk about for IOPS do not apply to Amazon Aurora as we are likely to hit throughput limits before we get anywhere close to IOPS limits. Keep an eye on cost though as you pay for every IOP, and high sustained IOPs can get quite expensive. Amazon RDS provides three volume types to best meet the needs of your database workloads:

General Purpose (SSD) also known as GP2 is a good choice for many database workloads. Gp2 volumes have a max throughput of 250 MBs. See below for IOPs limits.

Provisioned IOPS (SSD) also known as io1 volumes offers storage with consistent and low-latency performance and is designed for I/O intensive database workloads. Certainly more expensive compared to GP2 but a great choice if you need that consistent extra performance. Provisioned IOPs have a throughput limit of 1000MBs.

Magnetic is best suited for use-cases such as small data sets and infrequent access. Not recommended for performance-focused workloads.

GP2 IOPs

The gp2 storage type comes with a base IOPS that is a function of the size of the volume. The IOPS for a gp2 volume is the size of the volume in GiB x 3, with a minimum of 100 IOPS and a maximum of 16K IOPS. This is your baseline performance.

Gp2 volumes can burst up to 3000 IOPs for short periods of time. The amount of time you can exceed your baseline performance depends on your burst balance. When your balance drops to zero your performance reverts to the baseline. This is a best practice to set Cloudwatch alerts on your burst balance since a low burst balance can be a predictor of a performance slowdown.

Any gp2 volume larger than 1 TiB, the baseline performance is greater than the burst performance. For such volumes, burst credit is irrelevant because the baseline performance is better than the 3,000 IOPS burst performance. It is often cheaper to increase IOPS by expanding gp2 size than moving to PIOPs. Or if you do use gp2 for storage < 1 TiB, especially in production, it is a best practice to monitor burst balance in addition to latency and other I/O related metrics.

In the dashboard shown above, you can see at a certain workload exceeding baseline performance on RDS based on a 200G volume, out of burst balance and hitting a plateau on the IOPS dropping to a minimum, read/write throughput hitting a plateau, along with a spike on read/write latency and disk queue depth. These are also great metrics to watch and set alerts in your effort to continue to right-size RDS.

Read burst vs baseline performance on RDS and GP2 for a great write up on this topic.

Database Limits

A lot of engine-specific parameters under the RDS database parameter group are auto computed based on a pre-defined algorithm that is dependent on the instance type.

Mysqlmax_connections = {DBInstanceClassMemory/12582880}

innodb_buffer_pool_size = {DBInstanceClassMemory*3/4}PostgreSQLautovacuum_max_workers = GREATEST({DBInstanceClassMemory/64371566592},3)

max_connections = LEAST({DBInstanceClassMemory/9531392},5000)

In a nutshell, the higher the instance class higher the limits.

Note that while these are auto-configured recommendations, these are tunable and something that needs to be carefully evaluated while right-sizing.

Prod vs Pre-Prod

Running an alternate instance type or family in pre-prod (dev/test/stage) environments is quite common. This is useful if you are benchmarking performance against a specific instance type or family. However, If the alternate instance type is due to other reasons such as underutilized environments or lowering cost it could also pose some serious challenges in CI and testing. For example, sustained performance testing at higher workloads often exceeding baseline performance in pre-prod against burstable instance types would result in running out of balance faster than you can accrue. Many RDS users run smaller instances in their DEV and QA environments, but run identical instance types in their performance environments and control cost by turning the performance environment off when not running tests.

Right Size with Better Architecture

A better architecture for your application and database is always a plus and will help with many right-sizing efforts (not limited to RDS). Some key aspects to focus on your journey to better architecture and right-sizing:

- Optimized SQL.

- Connection management.

- Read/Write strategy with cluster endpoint integration.

- Auto-scaling (scale-in/scale-out) including Storage.

- Multi-Az and failover.

- Effective monitoring and alerting.

A good choice to start implementing some of these best practices would be with Amazon Aurora.

Amazon Aurora

Amazon Aurora features a distributed, fault-tolerant, self-healing storage system that auto-scales up to 64TB per database instance. It delivers high performance and availability with up to 15 low-latency read replicas, point-in-time recovery, continuous backup to Amazon S3, and replication across three Availability Zones (AZs).

Cluster endpoint for read/write, Reader endpoint for readOnly, Custom endpoints (Pick your instances and group them under an endpoint) for unique workloads, etc make it a great choice for a variety of application needs. Auto-Scaling the cluster, load balancing read traffic across the Reader cluster instances along with flexible scaling policies (scale-in/scale-out) gives you the horsepower when you need it. Certain Workloads could benefit from Aurora Multi-Master (more than one writer node), but use cases for Multi-Master are narrow. Some workloads could experience deadlocks that would not occur with a single master. Be sure you understand the implications of Multi-Master before considering it for your workload.

https://docs.aws.amazon.com/AmazonRDS/latest/AuroraUserGuide/aurora-multi-master.html

Note: To benefit from Aurora Auto Scaling, applications must support connections to new Aurora Replicas. For capabilities such as transparent read/write splitting, we could use a JDBC driver such as the MariaDB Connector/J utility (Mysql) or middleware such as PGPool-II (PostgreSQL) that provides native support for discovering aurora cluster members, managing connections as well as routing reads/writes to appropriate cluster members.

Amazon Serverless

Amazon Aurora Serverless is an on-demand, auto-scaling configuration for Amazon Aurora (MySQL-compatible and PostgreSQL-compatible editions), where the database will automatically start-up, shut down, and scale capacity up or down based on your application’s needs. It enables you to run your database in the cloud without managing any database instances.

With Aurora Serverless, you optionally specify the desired database capacity (Aurora capacity units or ACUs) range. Aurora Serverless also supports a pause and resume option where the database can be paused after a configured period of inactivity. When the DB cluster is paused, no compute or memory activity occurs (you still pay for storage). Optionally can scale down to zero capacity and helps lower your cost. Your DB will be unavailable from between 5 and 20 seconds when Aurora scales up or down, so it is suitable only for applications (or environments) that are not sensitive to latency spikes.

Applications using connection pooling (connector/j, hikaricp, etc) will need to configure minimum-idle to 0, for taking advantage of zero capacity. For Serverless apps the Data API is a great choice that saves you from the overhead of managing a connection.

Note: Aurora Serverless comes with a pricing model where you pay on a per-second basis for the database capacity that you use which makes it a cost-effective option only for certain categories of applications with infrequent, intermittent, or unpredictable workloads.

Summary

Nothing works better than trying things out and evaluating them yourself. On your journey to right-size RDS, it is highly recommended to be evaluating the application against a few different instance types and families, characterizing application needs against factors discussed in this post (compute, memory, network, cost, etc), benchmarking results for comparison, and making a choice backed by metrics.

It would be pretty awesome if there would be an RDS flavor of the AWS Compute Optimizer or a flavor of the AWS Trusted Advisor available in the near future that uses some AI magic, learns from the data, and workloads on AWS RDS, and predicts the best instance type for your application! In the meantime, implement best practices such as tagging your resources and make good use of tools available in your effort to rightSize such as AWS Cloudwatch, AWS Trusted Advisor, cost management tools such as the Cost Explorer, and AWS Budget. Also, take look at the AWS whitepaper (includes EC2 and many of the suggestions also apply to RDS) on some great tips to lower RDS costs and Right-Sizing!

I hope this post helps with your journey to RightSize AWS RDS!

Stay Safe and Healthy!

Thanks to Andrewmbrand (AWS), Darragh Grace (HMH Engineering), and Francislâiny Campos (HMH Engineering) for their feedback on the draft!